- Learn k6(JS) with me!

- Necessary setup

- How I trained

- Tasks and solutions

- The base script

- Task 1: Override options

- Task 2: Run a test using stages

- Task 3: Output time-series metrics to JSON.

- Task 4: Use the HTTP API

- Task 5: Set custom metrics

- Task 6: Modify k6 reporting

- Task 7: Modularize scripts

- Task 8: Log execution-context variables

- What I learned (besides some JS)

- About the process of scripting

- About using docs

- About the process of learning

- Just one more thing...

I'm the technical writer at k6. If you're on this site, you probably know what k6 is. But, to reiterate the essentials:

- k6 is a load-testing application.

- k6 load-test scripts are written in pure JavaScript.

When I started five months ago, I knew I had some work to do because:

- I barely knew what load testing meant,

- I'd never written a line of JavaScript.

Really, my "professional programming experience" culminated in some shell two-liners.

Fortunately, the k6 team gave me a set of challenges to get up to speed. In this article, I go over the eight challenges the k6 team gave me and present the ways I solved them (I don't promise elegance).

Besides helping me learn k6 and JS, the challenge was also a great way for me to experience the k6 documentation through the eyes of a developer. In the final section, I spend a little time discussing what I learned about learning.

Learn k6(JS) with me!

This post applies JS basics to basic k6 functionality.

For detailed k6 practice, check out the k6 workshop.

From docs, community discussions, and chats with engineers, I had a good sense of k6 capabilities. But I couldn't implement any test logic, as I couldn't write JavaScript. You might be in the opposite situation—perhaps you know how to write JavaScript but not how to work with the k6 API.

If you want to do your own k6 challenge, I put my solutions in collapsible sections.

Necessary setup

You should be able to copy and paste these solutions and run them on your machine. Every request goes to k6 test servers, which you can load as heavily as you want. To reproduce the solutions, this is what you need:

- k6 installed

- A human ability to use the command line and write files

- (Optional) a k6 Cloud account to visualize results

- (Optional) jq installed to filter some JSON files.

I used k6 v0.39.0, the latest version at the time of writing.

How I trained

As I said, I don't have any real programming experience. Here's how I've been getting up to speed:

- Watching Jonas Schmedtmann's Udemy course

- Reading and rereading the first few chapters of Eloquent JavaScript (some stuff is sinking in)

- Reading the great javascript.info and the great MDN JS docs

- Reading and editing a lot of k6 documentation (and writing a little, too).

I do have experience talking to programmers and writing for programmer audiences.

This is to say that I did have some theoretical knowledge, though my practical experience was nil (or rather, null).

Tasks and solutions

These tasks mostly build on each other. I tried to be minimal without being trivial. It wouldn't have been much fun to just copy the documentation and run it. I wanted to make scripts that I thought approximated real-world use.

I also try to provide a little context for certain key features.

The base script

For the base script, I used the example from the Running k6 document. There's not much to say about it:

- It imports the modules needed to run the script logic—in this case, it needs to make HTTP requests and simulate user pauses.

- The default function runs the script—in this case, a virtual user makes a GET request, then sleeps for one second.

Though I modify the logic and the target URI a few times, this minimal script is the foundation.

Task 1: Override options

Goal: Understand the different ways to configure k6 options

Docs: k6 Options

This was a good warm-up. I knew about the k6-option lookup order, because I keep changing the diagram. (I can't ever decide how to represent precedence: with equality operators? through arrows? through some sort of vertical and horizontal positioning?)

Use the basic script to see default VUs, duration, and iterations.

The basic script has one virtual user (VU) run the default function for one iteration:

Override defaults in exported script options

Now, I can add options to the script in an options object.

Override them using environment variables

This script still has exported options, but the environment variables override them.

Override them using command-line flags

I'm keeping the environment variables in to emphasize the "override" part of this challenge.
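The lookup order can be sketched in plain JavaScript. This only illustrates precedence and is not how k6 resolves options internally. The resolveOptions helper and all sample values are made up for the example. The commands in the comments use the K6_VUS environment variable and the --iterations flag.

```javascript
// Illustration only: later sources override earlier ones, mirroring the
// k6 precedence of defaults < script options < environment < CLI flags.
// resolveOptions is a hypothetical helper, not part of the k6 API.
function resolveOptions(defaults, scriptOptions, envOptions, cliFlags) {
  return Object.assign({}, defaults, scriptOptions, envOptions, cliFlags);
}

// With no configuration, k6 runs 1 VU for 1 iteration.
const defaults = { vus: 1, iterations: 1 };

// Values a script might export in its options object (invented).
const scriptOptions = { vus: 5, iterations: 10 };

// 1. Script options only:   k6 run script.js
const fromScript = resolveOptions(defaults, scriptOptions, {}, {});

// 2. Environment overrides: K6_VUS=10 k6 run script.js
const fromEnv = resolveOptions(defaults, scriptOptions, { vus: 10 }, {});

// 3. Flags override both:   K6_VUS=10 k6 run --iterations 20 script.js
const fromFlags = resolveOptions(defaults, scriptOptions, { vus: 10 }, { iterations: 20 });

console.log(fromScript, fromEnv, fromFlags);
```

Each call adds one more source, so the three results show which value wins at each level.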

Task 2: Run a test using stages

Goal: Understand the stages shortcut.

Docs: The stages option

Stages are a k6 option that ramps the number of VUs up and down.

Again I'm trying for minimal viable examples:

At first, it looked strange to me to see the same object in back-to-back lines:

But this duplication is not actually duplication: the first line ramps VUs up to a target of 10 in 10 seconds; the second keeps VUs at that level for 10 seconds more.
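A rough sketch of why two identical stages behave differently: the planned VU count depends on where the previous stage ended. The plannedVUs helper, the third ramp-down stage, and the starting value of 0 are assumptions for illustration. k6 itself takes durations as strings such as '10s' and works with whole VUs.

```javascript
// In a k6 script, the first two stages would be written as:
//   export const options = {
//     stages: [
//       { duration: '10s', target: 10 },
//       { duration: '10s', target: 10 },
//     ],
//   };
// Durations are plain seconds here to keep the arithmetic visible.
const stages = [
  { seconds: 10, target: 10 }, // ramp up to 10 VUs
  { seconds: 10, target: 10 }, // same object, but now it holds at 10 VUs
  { seconds: 10, target: 0 },  // assumed ramp-down stage
];

// Idealized VU count at time t (seconds), using linear interpolation
// from the previous stage's target to the current stage's target.
function plannedVUs(stages, t, startVUs = 0) {
  let from = startVUs;
  let elapsed = 0;
  for (const stage of stages) {
    if (t <= elapsed + stage.seconds) {
      const progress = (t - elapsed) / stage.seconds;
      return from + (stage.target - from) * progress;
    }
    elapsed += stage.seconds;
    from = stage.target;
  }
  return from; // after the last stage, stay at the final target
}

console.log(plannedVUs(stages, 5));  // halfway up the first ramp
console.log(plannedVUs(stages, 15)); // inside the hold stage
console.log(plannedVUs(stages, 25)); // halfway down the last ramp
```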

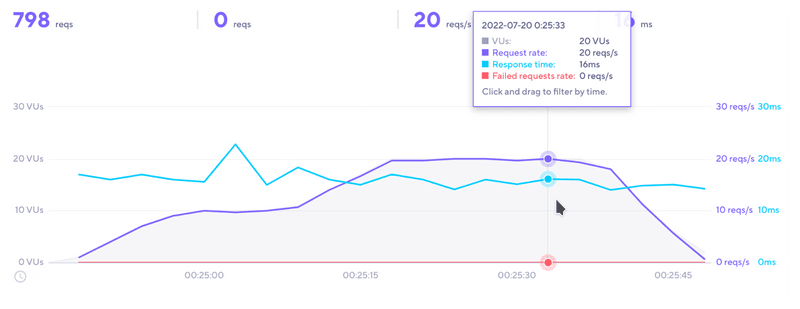

I guess it worked, but I'm not sure how to check. Visualization would confirm the ramping structure...

Run the same test on the cloud and visualize the ramping

Overall, I expect that stages that have these values:

...should have a request-per-second throughput that looks something like this:

Let's see:

Task 3: Output time-series metrics to JSON.

Goal: Understand the difference between time-series data and end-of-test reports.

Docs: Results output

This was also pretty much a warm-up. Test results can be either summary statistics or time series of all generated metrics. To get the granular time series, use the --out option.

Select the JSON metrics tab to see a (very small) sample of what the metrics look like. I added some iterations, since a single point isn't much of a time series.

Tests generate a lot of metrics! In the JSON data, each request has a point object for each metric, with its timestamps and timing values.
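To get a feel for the shape of that data, here is a small sketch that pulls the points for one metric out of the JSON output. It assumes the file came from a command like k6 run --out json=results.json script.js (the file name is arbitrary). The sample lines below are invented to show the structure and are not real results. This runs in Node, not in k6.

```javascript
// Invented sample in the shape of k6's JSON output: one object per line.
// "Metric" lines declare a metric; "Point" lines carry individual samples.
const sample = [
  '{"type":"Metric","metric":"http_req_duration","data":{"type":"trend","contains":"time"}}',
  '{"type":"Point","metric":"http_req_duration","data":{"time":"2022-01-01T00:00:00Z","value":120.5,"tags":{"status":"200"}}}',
  '{"type":"Point","metric":"http_reqs","data":{"time":"2022-01-01T00:00:00Z","value":1,"tags":{"status":"200"}}}',
  '{"type":"Point","metric":"http_req_duration","data":{"time":"2022-01-01T00:00:01Z","value":98.2,"tags":{"status":"200"}}}',
].join('\n');

// Return the time and value of every Point recorded for one metric.
// pointsFor is a hypothetical helper name.
function pointsFor(ndjson, metricName) {
  return ndjson
    .split('\n')
    .filter((line) => line.trim() !== '')
    .map((line) => JSON.parse(line))
    .filter((entry) => entry.type === 'Point' && entry.metric === metricName)
    .map((entry) => ({ time: entry.data.time, value: entry.data.value }));
}

const durations = pointsFor(sample, 'http_req_duration');
console.log(durations);
```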

Task 4: Use the HTTP API

Goal: Get more familiar with HTTP API.

Docs: HTTP requests, HTTP API, Tags and groups

For these and most following tasks, I switched the target URL to https://test-api.k6.io, a demo REST API that views, creates, and deletes JSON representations of crocodiles.

First, I needed to make multiple requests. I knew I could just add two URLs. But I thought it would be more fun to iterate over all crocs returned. It took some trial and error, but I came up with this:

Note: The trailing slashes save a redirect.

This lowers the test time just a bit. I did not discover that myself. A developer told me after I proudly presented the "bug" I thought I'd found.
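One way to iterate over the returned crocodiles, sketched in plain JavaScript: build one detail URL per crocodile, each with a trailing slash. The crocUrls helper and the sample list are made up for illustration. In a real run, the list would come from the API response.

```javascript
const BASE_URL = 'https://test-api.k6.io';

// Build one detail URL per crocodile. The trailing slash saves a redirect.
function crocUrls(crocs) {
  return crocs.map((croc) => `${BASE_URL}/public/crocodiles/${croc.id}/`);
}

// Invented stand-in for the parsed list response.
const crocs = [
  { id: 1, name: 'Croc A', sex: 'M' },
  { id: 2, name: 'Croc B', sex: 'F' },
];

console.log(crocUrls(crocs));

// In a k6 script, with `import http from 'k6/http'`, the same idea is:
//   const crocs = http.get(`${BASE_URL}/public/crocodiles/`).json();
//   for (const url of crocUrls(crocs)) {
//     http.get(url);
//   }
```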

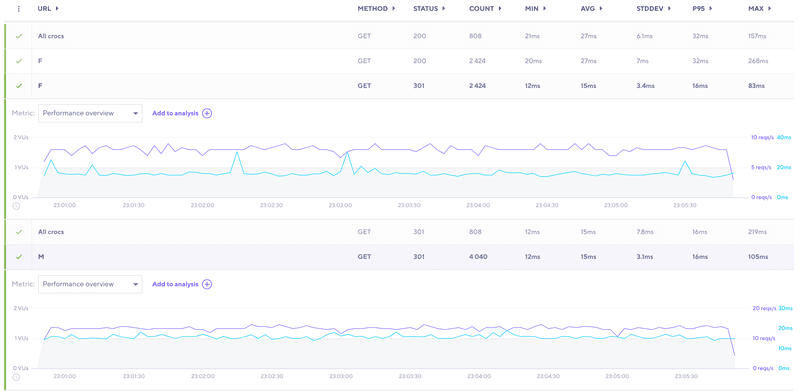

Tag particular requests and filter them on k6 Cloud

Even or odd seems like a gimmicky tag.

But that's okay, it worked. With Tags, users can filter results to custom subsets. Here, I use jq to get only results for even-tagged crocs.
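A minimal sketch of the even-or-odd tag. The tag name parity and the helper parityParams are hypothetical. The returned object has the shape that k6 request functions accept as their params argument.

```javascript
// Build request params that tag a request by whether the croc ID is even or odd.
function parityParams(id) {
  return { tags: { parity: id % 2 === 0 ? 'even' : 'odd' } };
}

console.log(parityParams(4));
console.log(parityParams(7));

// In a k6 script: http.get(url, parityParams(croc.id));
//
// With JSON output, a jq filter along these lines keeps only the even-tagged points:
//   jq 'select(.type == "Point" and .data.tags.parity == "even")' results.json
```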

Group dynamic URLs and visualize the test on k6 Cloud

By default, k6 tags each request with its URL, so every distinct URL gets its own tag value. This can create a big mess with dynamic URLs.

Of the crocodile objects, the sex property was the only one that had repeated values. That seems like a good candidate for a name.
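The grouping idea, sketched with made-up data: setting the name tag to a repeated value collapses many URLs into a few groups. The groupedParams helper and the sample crocodiles are assumptions for illustration.

```javascript
// Use the crocodile's sex as the value of the name tag, so that requests
// to many different URLs are reported under a small number of names.
function groupedParams(croc) {
  return { tags: { name: croc.sex } };
}

// Invented sample: four crocodiles, four URLs, but only two sexes.
const crocs = [
  { id: 1, sex: 'M' },
  { id: 2, sex: 'F' },
  { id: 3, sex: 'M' },
  { id: 4, sex: 'F' },
];

const names = new Set(crocs.map((croc) => groupedParams(croc).tags.name));
console.log(`${crocs.length} URLs grouped under ${names.size} names`);

// In a k6 script: http.get(url, groupedParams(croc));
```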

Now, to run it on the Cloud:

Task 5: Set custom metrics

Goal: Get more familiar with Custom Metrics.

Docs: k6 metrics

This time, I had to dig into the docs. I couldn't figure out what a good metric would be or how I was even supposed to find data to make a metric from.

I looked at the example and noticed a timings object. I assumed these timings were built into JavaScript, like forEach. (Now, from a distance of a few weeks, I can look back and laugh at how naive I was.)

I then discovered that k6 has a Response object whose data k6 uses to create metrics. I wondered why the metrics page wouldn't mention that. Then I noticed a truncated reference for the Response object, in a table on the same page that I'd been looking at. I scrolled past this table at least ten times, and it's not a long page.

I should be embarrassed by that, but mostly I wonder whether I can make the docs clearer here. Like most people, I scan up and down a page, looking for what I need. A good document should respect that people rarely read whole pages.

Create one or two types of custom metrics (Counter, Gauge, Trend, Rate)

I decided to make two metrics:

- A gauge to save a maximum value

- A trend to make a p99 value

The gauge wasn't hard, but it took me so long to make a trend metric that didn't seem trivial. Finally, I came up with the idea of making a differential between sending and receiving time. Maybe this is still trivial, but I think it works (in the sense that it doesn't throw errors or emit evidently junk data).
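The differential itself is one subtraction on the response's timings object. The sketch below uses invented timing values. The gauge name max_duration in the comments is hypothetical, while rec_send_differential is the metric name used in this post.

```javascript
// Difference between the time spent receiving the response and the time
// spent sending the request, in milliseconds.
function recSendDifferential(timings) {
  return timings.receiving - timings.sending;
}

// Invented values in the shape of the timings object on a k6 response.
const timings = { sending: 0.5, waiting: 110, receiving: 2, duration: 112.5 };

console.log(recSendDifferential(timings));

// In a k6 script, the value would feed custom metrics roughly like this:
//   import { Gauge, Trend } from 'k6/metrics';
//   const maxDuration = new Gauge('max_duration');
//   const differential = new Trend('rec_send_differential');
//   ...
//   maxDuration.add(res.timings.duration);
//   differential.add(recSendDifferential(res.timings));
```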

Note: This is where I start to get embarrassed by my code. I felt queasy about mutating the response object, and I should set the URL as a variable; I made three different names about timing and none seemed right.

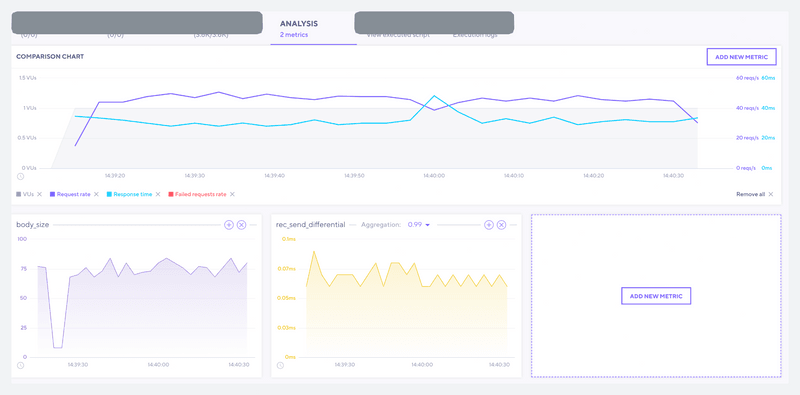

See the results on the console and Cloud

Results on the console:

Look for rec_send_differential:

Define a Threshold on the p99 of a custom metric.

Validate a Pass and a Failure.

Thresholds set up pass/fail conditions for the test based on metrics. I see two ways to fail a threshold:

- Load a system until it buckles

- Hold the system to an unrealistically high standard.
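The second way to fail can be sketched without running any load. The threshold limits and sample values below are invented. The percentile function is a simplified nearest-rank calculation for illustration, not k6's own implementation.

```javascript
// In a k6 script, a threshold on the custom metric could look like this,
// exported as part of `options` (the 100 ms limit is an assumption):
//   thresholds: { rec_send_differential: ['p(99)<100'] }

// Simplified nearest-rank percentile over a list of samples.
function percentile(values, p) {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// A threshold of the form p(99) < limit passes only if the p99 is below the limit.
function passesP99(values, limitMs) {
  return percentile(values, 99) < limitMs;
}

// Invented samples of the differential, in milliseconds.
const samples = [1.2, 0.8, 2.5, 1.1, 40];

console.log(passesP99(samples, 100)); // generous limit
console.log(passesP99(samples, 1));   // unrealistically strict limit
```

The same data passes or fails depending only on the limit, which is what holding the system to an unrealistic standard means in practice.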

Task 6: Modify k6 reporting

Goal: Get familiar with stats and reporting in k6.

Docs: End-of-test-summary, Summary-trend stats option

Change summary output with the summaryTimeUnit and summaryTrendStats options

I didn't know about these options, but they are convenient ways to change test-result precision. Again, I'm adding iterations and VUs, because percentile values in a single-value dataset are almost literally "pointless."

Use handleSummary and log the summary on a local JSON file.

The handleSummary() function creates a custom report of end-of-test stats. I dreaded this one, because handleSummary() confused me when I first read about it in the docs (I made an issue).

I knew that the handleSummary() function in theory processes the results output, but I could not figure out where to start.

But, after I tried the example from the docs, I realized this wasn't so difficult. The function "comes" with a variable, data, which represents the summary report in JSON. Still, there's no way a reader could make a custom summary without experimentation. The docs have some gaps to fill here.
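A minimal sketch of the idea, runnable in Node with a stand-in for the data argument. The keys of the returned object are file names and the values are the file contents. The summary.json file name, the p95_ms key, and the numbers in the sample data are assumptions for illustration.

```javascript
// In a k6 script, this function would be exported: `export function handleSummary(data)`.
// k6 calls it at the end of the test with the summary data.
function handleSummary(data) {
  const p95 = data.metrics.http_req_duration.values['p(95)'];
  return {
    // Each key is a file to write; each value is that file's content.
    'summary.json': JSON.stringify({ p95_ms: p95 }, null, 2),
  };
}

// Invented stand-in for the summary data that k6 would pass in.
const fakeData = {
  metrics: {
    http_req_duration: { values: { avg: 110.2, 'p(95)': 180.4 } },
  },
};

console.log(handleSummary(fakeData)['summary.json']);
```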

I went overboard with my handleSummary() report. As a technical writer, the idea of automatically structuring documents appeals to me. I have no idea whether this is an appropriate way to make an HTML template. But it works.

If you want to test this, open the summary.html file that's generated in the directory where you run the test.

I feel like this code is bad.

I'm pretty sure that I should use the this keyword to make the way I reference objects more general. But I already spent an unreasonable amount of time making this template, so this is all for now.

Task 7: Modularize scripts

Goal: modularize your tests.

Docs: Modules

Move some logic to a JS function within the test script

I already did this with my metrics.

Move the function to a local module in a separate file

Immediately I could see the appeal of this approach: extract each piece of logic to its own function, then mix and match scripts.

This code didn't run at first, because I didn't import the http module into the helper file. It makes sense in retrospect: the helper logic calls the module.

Extend your module with more functions

I thought my handleSummary was the best function to import since it was so long. I made a new function called htmlSummary(), then called it in the main script's handleSummary() function.

I couldn't understand why it didn't work. Finally, a friendly developer helped me: to return data, one must return data.
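The lesson in miniature: the helper has to return the string it builds, and handleSummary() has to return the object that holds it. This is a simplified sketch with invented data, not the template from this post. In a k6 project, htmlSummary() would live in its own file and be exported.

```javascript
// Helper that turns the summary data into a small HTML table.
function htmlSummary(data) {
  const rows = Object.entries(data.metrics)
    .map(([name, metric]) => `<tr><td>${name}</td><td>${JSON.stringify(metric.values)}</td></tr>`)
    .join('\n');
  // Without this return, the caller receives undefined and the file stays empty.
  return `<table>\n${rows}\n</table>`;
}

// In a k6 script, this function would be exported.
function handleSummary(data) {
  return { 'summary.html': htmlSummary(data) };
}

// Invented stand-in for the summary data that k6 would pass in.
const fakeData = {
  metrics: {
    http_reqs: { values: { count: 8 } },
    iterations: { values: { count: 4 } },
  },
};

console.log(handleSummary(fakeData)['summary.html']);
```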

Import a remote module from JSLIB and use it on your script

Jslib is a set of utils for k6.

I decided to make new test logic that would create a random user.

I was able to generate random data, but I lost a lot of time fiddling with the POST method from the http module. Finally I used the httpx module and it worked.
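For comparison, here is a hedged sketch of how a JSON POST can be prepared for the plain http module. A common stumbling block is that a JSON body has to be stringified and sent with a Content-Type header. The randomString function is a local stand-in for a jslib utility. The registration endpoint and the field names are assumptions based on the demo API.

```javascript
// Local stand-in for a random-string utility.
function randomString(length, charset = 'abcdefghijklmnopqrstuvwxyz') {
  let out = '';
  for (let i = 0; i < length; i++) {
    out += charset[Math.floor(Math.random() * charset.length)];
  }
  return out;
}

// Field names are assumed for illustration.
function randomUser() {
  return {
    first_name: 'Crocodile',
    last_name: 'Owner',
    username: `user_${randomString(8)}`,
    password: randomString(12),
  };
}

// Prepare the three arguments that http.post() takes: URL, body, params.
function registrationRequest(user) {
  return {
    url: 'https://test-api.k6.io/user/register/',
    body: JSON.stringify(user), // JSON must be sent as a string
    params: { headers: { 'Content-Type': 'application/json' } },
  };
}

const request = registrationRequest(randomUser());
console.log(request.url, request.params);

// In a k6 script, with `import http from 'k6/http'`:
//   const { url, body, params } = registrationRequest(randomUser());
//   const res = http.post(url, body, params);
```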

This is definitely an area where the docs need to improve: the "experimental" httpx module is used in POST examples all over the docs, and the "vanilla" http module is used less. Either the http module needs more example documentation, or the httpx methods should be designated as stable and recommended.

Task 8: Log execution-context variables

Goal: Understand execution-context variables.

Docs: Execution context variables, Cloud tests from the CLI

- Log variables to the console.

- Print all of them when running a cloud test distributed across multiple load zones

- Print all the Cloud Environment variables

For this last one, it'd be tedious to show different logs. How about a function to do it all at once?

As this test uses cloud execution variables, some variables will be undefined if you run this locally.
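A sketch of such an all-at-once logger, written as a plain function so that it also runs outside k6. The describeContext name is hypothetical. The LI_ variables are the cloud environment variables, and they show up as undefined when they are not set, which is what happens in a local run.

```javascript
// Build one log line from the VU number, the iteration number, and the
// cloud environment variables found in the env object.
function describeContext(vu, iter, env) {
  const cloud = ['LI_LOAD_ZONE', 'LI_INSTANCE_ID', 'LI_DISTRIBUTION']
    .map((key) => `${key}=${env[key]}`)
    .join(' ');
  return `VU=${vu} ITER=${iter} ${cloud}`;
}

// Simulated local run: no cloud variables are set.
console.log(describeContext(1, 0, {}));

// In a k6 script, inside the default function:
//   console.log(describeContext(__VU, __ITER, __ENV));
```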

Note: It would make a cleaner script to extract this logger to its own function. But, once again, I couldn't get the data to show when I imported it as a module. I suspect the issue was about failing to return data again.

What I learned (besides some JS)

Challenges are great ways to learn an application. They should be used more often in onboarding and in training. The process taught me a good deal not only about k6 but also about the experience of learning to use k6.

I'm unusual in that I read much more documentation than I write code. As I did this challenge, I tried to be aware of how I navigated the k6 information environment, and I tried to think about how a developer would experience these docs in a time-crunched professional environment.

These are the lessons that I hope stick with me.

About the process of scripting

Here's what I learned after a week of pretending I was a performance engineer:

- It's easy and fun to combine functions.

- Debugging starts out being fun, then gets very tedious.

- There's a lot of fiddling and trial and error to write a decent script.

- Refactoring can be procrastination.

- Working on the internet involves a lot of uncertainty.

Some points I'd like to expand on:

Refactoring can be procrastination.

I started to find many places where I was reusing functions and variables. At first, my inclination was to DRY everything out. After a while, I realized I'd been spending a lot of time on something that was ultimately for a blog post.

Working on the internet involves a lot of uncertainty.

The knowledge needed to work on the internet is an open set. To learn deeply about one thing, you must learn how to interface with another thing, which probably requires understanding the behavior of something else, and the loop never closes.

If I look at the HTML living standard, I theoretically could memorize everything. I probably could receive a specification for an HTML document and make it by just reading the docs.

But as soon as I would try to send that HTML over the internet, a huge new problem set would emerge: protocols, APIs, network particularities, etc. It's impossible to study enough to know everything. The only practical way to know the dozens (hundreds? (millions?)) of particularities is through experience and direct encounter.

For example, I encountered a problem where missing trailing slashes cause redirects. I'm sure this information is out there, I'm sure it's well-known, I'm sure it's even taught, but how is anyone supposed to know that if they don't encounter the information in real life? Who would ever syllogistically reason this information out from a set of first principles? And does the internet even have a set of first principles?

Some people probably have brains that are naturally wired for network programming. Not me. So, I need to explore the space to find all its edges.

About using docs

I also discovered many places where the docs caused me unnecessary friction.

Examples that are too general don't help beginners.

Procedures should not have implicit steps (unless the audience really should already know).

Learning is non-linear. Though none of the JavaScript material I've looked at has covered making HTTP requests yet, I felt like I had a pretty good idea of what to do, because I'd spent time documenting REST APIs.

Yet, other more fundamental aspects of JS—such as returning values—perplexed me multiple times.

Good docs hugely reduce tedium.

It doesn't matter how advanced you are: when you come to a new technology, you come to a new technology.

Walking a mile in a developer's shoes (sitting a non-mile in a developer's chair?) showed me how much it helps to have a little context and usable examples. When the examples were specific, solutions came easily. When the examples were fragmentary or overly general, a period of trial and error ensued.

About the process of learning

As much as I learned about JS and k6, I may have learned more about the experience of learning itself. I hope that this knowledge will help me write better technical content.

Skills take time to learn.

This challenge took me a long time. Without all the practice from the step-by-loving-step Udemy course, it would have taken much longer.

High-level understanding underestimates much necessary ground-level work.

I've been reading about networks, programming, and operating systems for a long time (though mostly superficially). Lately, I've also been reading and watching lots of material on JavaScript and its theory.

I've also been reading the k6 docs very closely. Some parts of the docs I probably know better than anybody. When I looked at the challenge problems, I smirked at my screen and thought, this will be easy.

I knew where to find all the information. I had a general idea of the necessary programming logic. If you'd asked me, I probably could have given you a pretty plausible prose overview of each solution.

But once I started, I discovered that implementation required many details I was fuzzy about. And figuring out these details took the vast majority of solution time.

So much application of skill is intuitive and tacit.

Principles of good design only get one so far. For example, I know it's good Unix design to do one thing and do it well, but what is the one thing I'm supposed to do? How do I make it do it well?

Similarly, I know that good technical document design starts with the most important information. Whether I do this or not is one thing, but I try to. Yet, I certainly never vocalize how I design a document to myself. I just rely on intuition. I have principles, I believe in them, I edit texts using them, I sometimes make post-hoc justifications on the grounds of them, but in the moment of creation, I'm too busy writing to think.

Practice deepens understanding, and deeper understanding makes more meaningful practice.

Every time I returned to Eloquent JavaScript after practicing, I discovered that I understood more. When I started scripting, I began to remember to apply knowledge that I conceptually understood, like encapsulation and scopes.

Unfortunately, docs don't traditionally facilitate practice. If you're lucky, there's a tutorial, and that's it.

Docs should do more to facilitate both practice and understanding, and makers should spend more time supplementing material with things like courses and workshops. (P.S. there's a k6 workshop).

Just one more thing...

Finally, one more important life lesson about programming:

It's easier to debug a JS script if you ask people who helped build the JS engine.

True, k6 core developers aren't the maintainers of Goja. But some have made a substantial number of commits. I tried not to abuse their time, but I did ask three questions. That was a big advantage.

Thanks to everyone who helped out!
