AWS recently made its Arm-based processors generally available for Lambda functions. With that change, Lambda functions can now run on Graviton2, which AWS says offers better performance at a lower cost.

I built a sample API on AWS using API Gateway and Lambda, and wrote two very different endpoints for my experiment: one CPU-intensive (calculating Pi using Leibniz's formula), the other a typical data transfer endpoint (returning an arbitrary number of bytes).
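The endpoint code itself isn't part of this post, so here is a minimal sketch of what the two handlers might look like in Python, assuming API Gateway's Lambda proxy integration. The Leibniz series is π/4 = 1 − 1/3 + 1/5 − 1/7 + …, so the CPU-intensive endpoint is essentially one tight loop. The handler names, the `iterations` and `bytes` query parameters, and the reading of 100 KB as 100,000 bytes are all hypothetical choices for illustration.

```python
import json


def leibniz_pi(iterations: int) -> float:
    """Approximate Pi with the Leibniz series: pi/4 = 1 - 1/3 + 1/5 - 1/7 + ..."""
    total = 0.0
    sign = 1.0
    for k in range(iterations):
        total += sign / (2 * k + 1)
        sign = -sign
    return 4 * total


def _query_int(event: dict, name: str, default: int) -> int:
    # API Gateway proxy events set queryStringParameters to None when there are none.
    params = event.get("queryStringParameters") or {}
    return int(params.get(name, default))


def pi_handler(event, context):
    # Hypothetical CPU-bound endpoint: run time is dominated by the loop in leibniz_pi.
    iterations = _query_int(event, "iterations", 500_000)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"iterations": iterations, "pi": leibniz_pi(iterations)}),
    }


def bytes_handler(event, context):
    # Hypothetical data transfer endpoint: returns an ASCII payload of the requested
    # size (one byte per character). Assumes 100 KB means 100,000 bytes.
    size = _query_int(event, "bytes", 100_000)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "text/plain"},
        "body": "x" * size,
    }
```

The same code can be deployed unchanged to both x86_64 and arm64, since pure Python has no architecture-specific dependencies.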

One hard limit on the size of my experiment was that my personal AWS account has an unreserved concurrency limit of 50, which roughly means no more than 50 Lambda instances can run in parallel. However, you don't need big numbers to gain insights.

The tests

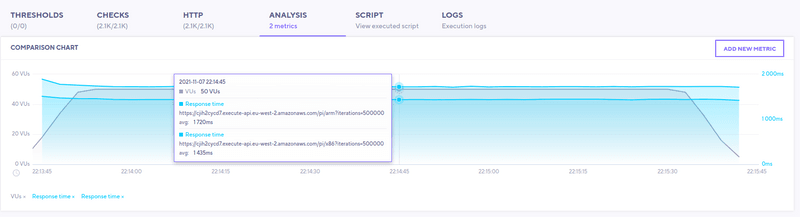

I deployed both endpoints on both x86_64 (Intel) and arm64 (Graviton2). Both tests were ramp-up tests following largely the same pattern. For the CPU-intensive endpoint I used a large number of iterations of the Leibniz formula (half a million, to be precise). The data transfer test requested 100 kilobytes. The CPU-intensive test:

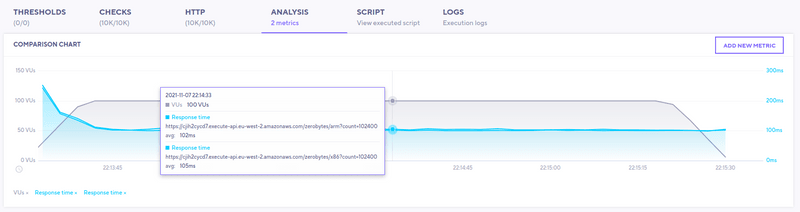

And the data transfer test:

Results (data transfer)

The results for the data transfer endpoint were roughly what I had expected: there was little difference between the two architectures, since the endpoint doesn't really push the CPU.

One interesting thing to note, though, is that the response time starts at 250 ms before stabilizing at 100 ms. This is typical of a Lambda cold start, which usually adds somewhere in the ~100 to 200 ms range. When you first trigger a Lambda function, AWS needs to spin up the underlying execution environment (Lambda runs these in lightweight Firecracker microVMs). Once your instances are running, all subsequent requests take much less time.
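One way to observe cold starts from inside a function is to rely on the fact that Lambda runs module-level code once per execution environment and then reuses that environment for warm invocations. The sketch below flags the first invocation in each environment; the handler and the response fields are illustrative and were not part of the original experiment.

```python
import json
import time

# Module-level code runs once per execution environment, i.e. only during a
# cold start; warm invocations reuse the already-initialised module.
_ENV_STARTED_AT = time.monotonic()
_is_cold = True


def handler(event, context):
    global _is_cold
    cold = _is_cold
    _is_cold = False  # every later invocation in this environment is warm
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({
            "cold_start": cold,
            "environment_age_s": round(time.monotonic() - _ENV_STARTED_AT, 3),
        }),
    }
```

Logging `cold_start` alongside response times makes it easy to separate the initial 250 ms requests from the steady-state ones.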

Results (CPU load)

For the CPU-intensive endpoint we again see the cold start, although the curve is less steep than in the previous test because the regular response time is much larger in comparison. What's interesting here, though, is that x86 is about 20% faster than the new chips, which is exactly the opposite of what AWS claims.
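To check whether a gap like this comes from raw CPU speed rather than from Lambda itself, one option is to time the same loop outside Lambda on an x86 and a Graviton machine. This is a minimal sketch using the standard `timeit` module; the 500,000 iterations match the experiment, while the best-of-five approach is an assumption chosen to reduce noise.

```python
import platform
import timeit


def leibniz_pi(iterations: int) -> float:
    """Approximate Pi with the Leibniz series: pi/4 = 1 - 1/3 + 1/5 - ..."""
    total = 0.0
    sign = 1.0
    for k in range(iterations):
        total += sign / (2 * k + 1)
        sign = -sign
    return 4 * total


def benchmark(iterations: int = 500_000, repeats: int = 5) -> float:
    """Best-of-`repeats` wall-clock time, in milliseconds, for one leibniz_pi call."""
    timings = timeit.repeat(lambda: leibniz_pi(iterations), number=1, repeat=repeats)
    return min(timings) * 1000


if __name__ == "__main__":
    # platform.machine() reports e.g. "x86_64" or "aarch64" on Linux.
    print(f"{platform.machine()}: {benchmark():.1f} ms")
```

Taking the minimum rather than the mean filters out interference from other processes, which is the usual convention for micro-benchmarks.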

Conclusions

What I learned from this experiment is that while AWS boldly claims Arm is 20% faster and 20% cheaper, my results suggest it is rather 20% slower and 20% cheaper, at least for CPU-bound work. This might vary with the instructions your code uses: this implementation of the Leibniz formula consists only of loops, assignments, additions and other basic arithmetic operations. Still, it turns out others are seeing similar results, like the folks at Narakeet.
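Lambda bills compute by duration multiplied by allocated memory, at a per-GB-second rate, so at equal memory the per-invocation cost ratio between the two architectures is the price ratio times the duration ratio. Plugging in the rough figures from this experiment (Arm about 20% cheaper per GB-second and about 20% slower on the CPU-bound endpoint), and ignoring the flat per-request fee:

```latex
$$
\frac{C_{\text{arm}}}{C_{\text{x86}}}
  = \frac{p_{\text{arm}}}{p_{\text{x86}}} \cdot \frac{t_{\text{arm}}}{t_{\text{x86}}}
  \approx 0.8 \times 1.2 = 0.96
$$
```

So for CPU-bound work the saving shrinks to roughly 4%. If "x86 is 20% faster" is read instead as Arm taking 1.25 times as long, the ratio becomes 0.8 × 1.25 = 1.0, i.e. no saving at all. For the data transfer endpoint, where durations were roughly equal, the ratio stays close to 0.8.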

That's not bad news, though. Workloads that aren't CPU-bound still benefit from the lower price without any speed trade-off. Arm also draws less power, which is better for the planet.

While the experiment only covers Lambda, similar results might show up on Graviton2-based EC2 instances, which use the same processor.

The concurrency (the number of Lambda instances running at the same time) roughly equals the number of requests per second your API receives, multiplied by the average response time in seconds. It would be interesting to monitor how this behaves over time and under higher load.
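This relationship is Little's law applied to Lambda. A small sketch of the arithmetic follows (the function names are illustrative): with the account's limit of 50 and the ~100 ms steady-state response time of the data transfer endpoint, the API could sustain roughly 500 requests per second before requests start being throttled, while at the ~250 ms cold-start latency that ceiling drops to about 200.

```python
def estimated_concurrency(requests_per_second: float, avg_duration_s: float) -> float:
    """Average number of in-flight invocations (Little's law: L = arrival rate x time)."""
    return requests_per_second * avg_duration_s


def max_sustainable_rps(concurrency_limit: int, avg_duration_s: float) -> float:
    """Highest steady request rate before the concurrency limit is reached."""
    return concurrency_limit / avg_duration_s


if __name__ == "__main__":
    limit = 50  # unreserved concurrency of the account used in this experiment
    for label, duration in [("warm, ~100 ms", 0.100), ("cold start, ~250 ms", 0.250)]:
        print(f"{label}: ~{max_sustainable_rps(limit, duration):.0f} req/s")
```

Since this is an average, bursts and slower requests (such as the CPU-intensive endpoint) push the real concurrency higher, so the limit is reached sooner than the steady-state estimate suggests.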