TL;DR: Load Impact is growing, and one of the challenges with growth is making sure your infrastructure and development cycle mature along with that success. This is Part 1 of our series, “The Road to Microservices.” Here’s what we’ll cover:

- Why we decided to make the change to a microservice-oriented approach

- We’re switching to Docker! (Isn’t everyone, at this point?)

- What we expect to get out of these changes

Sometimes you have to redesign your software, and maybe even the environment in which it runs. The need can arise for many reasons, anything from mistaken assumptions in the original design to growth or an opportunity to pivot your business.

When writing completely new software, you often begin with a prototype. If that prototype proves to be a success, you might simply start building on top of it, extending it ad hoc to meet new demands. (If you are screaming at me right now that this is not how things are done, all I can say is that I’ve seen it happen time and time again.)

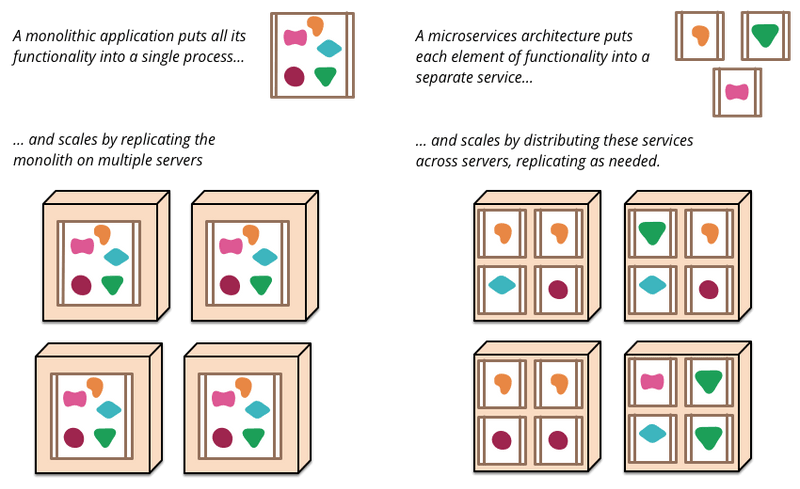

As you go along, you will identify code to move into separate modules or libraries so that it can be shared and duplication reduced. The risk of this approach is that you might end up with a monolithic code base that has too many responsibilities and tries to do too many things.

Working in a single code base might be fine if you are two or three people in the same room, but as soon as the team grows, so do the risks. If every piece of software in your stack shares a big library, for instance, you’ve essentially created a single point of failure.

A bug in that library will affect your whole stack, and you definitely don’t want that.

Keeping this kind of code base in check also demands central ownership. Usually the original authors have to act as maintainers, bearing the responsibility for code reviews and for planning further development as the team grows. Most likely they are the only people with a full view of how everything interconnects.

Deploying code like this to production can be a nightmare, which tends to make releases infrequent and large: the opposite of what you want.

At Load Impact, the team is no longer small or confined to a single location. We are a distributed team, and the way we work (and, to some extent, the structure of our software) needs to reflect that. One of my first assignments at Load Impact has been to overhaul a particular piece of our backend code. It’s a fairly complex piece of software that absolutely does too many things.

To enable faster development and release cycles, we’ve decided to split this project into several smaller ones, following a microservices-oriented approach, with the services deployed as Docker containers.

This wonderfully descriptive image is from Martin Fowler of Thoughtworks.

This type of architecture is probably where containers are the most obvious fit, because the whole idea revolves around lightweight, isolated processes. Hopefully this also means it’ll be easier for new developers to dig into these smaller units of code.

Development is one thing; another thing to consider is the environment where the code will be deployed. Introducing Docker means we have to be able to run containers in our production environment, preferably in a clustered and fail-safe way.

We use Amazon Web Services (AWS) for most of our production environment, so we started looking into Amazon ECS for this. Our AWS environment has also evolved over time, and only a handful of people really know how all the pieces fit together.

While looking at how to implement and deploy these changes, we ended up with two separate projects: one for the code and one for the infrastructure. We decided to take a new approach to our AWS environment and use a tool to automate its creation and change management. Apart from making everything automated, a great side effect of this is that everything ends up documented in configuration files.

In a series of blog posts (this is the first!), I will go through the process, challenges and results of refactoring a big chunk of code and applying the infrastructure automation needed to get everything running, and to make it easier than ever to work with for both operations and developers.

It has taken quite some work to get things going, but I hope the lessons learned will be useful to everyone, and we’re looking forward to sharing this journey with as many people as possible!