As of k6 v0.27, there is now native support for constant arrival rates, making the approach described in this article obsolete. Please visit the new article that explains how to generate a constant request rate in k6 with the new scenarios API.

Overview

There are two different categories of tools in the load testing ecosystem:

- Non-scriptable tools

- Scriptable tools

The first category, non-scriptable tools, is usually used to load test either a single endpoint or a set of endpoints. These tools typically generate load at a constant rate, measured in requests per unit of time (usually per second). Non-scriptable tools apply no logic to the load testing process beyond generating that load.

In contrast, the second category is scriptable tools. Because they support scripting, they can apply logic during the execution of a load test. The scripting languages vary between tools, from declarative languages like XML to scripting languages like Lua and JavaScript. These tools are used to create scenarios that execute a user flow, simulating how users behave while using the system under test.

k6 fits well in the second category: it uses JavaScript to let testers create scenarios and implement their own logic. Because of this, simulating the constant-rate "hammering" approach of non-scriptable tools takes a little extra effort to prevent race conditions. In short, the tester has to add a bit of sauce (read: a workaround) to make it work efficiently.

We are working on a new set of changes to help k6 fit into both categories. Pull request #1007 is going to introduce seven new types of executors (test runners) that can handle almost every kind of load test.

Calculating RPS with k6

With k6, you can test in terms of requests per second (RPS) by limiting how many requests each VU is able to make per unit of time. The formula to calculate the RPS is as follows:

Request Rate = (VU * R) / T

- Request Rate: measured by the number of requests per second (RPS)

- VU: the number of virtual users

- R: the number of requests per VU iteration

- T: a value, in seconds, larger than the time needed to complete a VU iteration
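To make the formula concrete, here is a minimal sketch of it as a plain JavaScript function. The name `requestRate` and its parameter names are hypothetical, chosen for illustration; they are not part of the k6 API, and the code runs outside k6 (for example, in Node.js).

```javascript
// Hypothetical helper (not a k6 API): evaluates Request Rate = (VU * R) / T.
// vus:                  number of virtual users (VU)
// requestsPerIteration: requests made in one VU iteration (R)
// iterationSeconds:     paced length of one VU iteration, in seconds (T)
function requestRate(vus, requestsPerIteration, iterationSeconds) {
  if (iterationSeconds <= 0) {
    throw new RangeError("iterationSeconds (T) must be positive");
  }
  return (vus * requestsPerIteration) / iterationSeconds;
}

// 600 VUs, each making 10 requests per 6-second iteration.
console.log(requestRate(600, 10, 6)); // 1000
```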

The above formula can also be used to calculate the number of virtual users your script needs. You already know the number of requests R, since you defined them in your script. Next, you need to estimate the time T needed to complete a VU iteration. Suppose each VU iteration makes 10 requests, and you expect each to take 0.5s to complete (http_req_duration). The time to complete the whole VU iteration is then the number of requests multiplied by the expected round-trip time of each request/response. It is also good practice to add one or more seconds to T to account for delays.

T = (R * http_req_duration) + 1s →

T = (10 * 0.5s) + 1s = 6s
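The same estimate for T can be written as a small function. This is a sketch: `iterationTime` is a hypothetical name, and the 1-second default buffer simply mirrors the rule of thumb above rather than any value k6 prescribes.

```javascript
// Hypothetical helper: estimates T = (R * http_req_duration) + buffer.
// requests:           number of requests per VU iteration (R)
// reqDurationSeconds: expected http_req_duration per request, in seconds
// bufferSeconds:      extra slack for delays (defaults to 1s)
function iterationTime(requests, reqDurationSeconds, bufferSeconds = 1) {
  return requests * reqDurationSeconds + bufferSeconds;
}

console.log(iterationTime(10, 0.5)); // 6
```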

Now that you know the values for R and T, you need to decide on the RPS you want to achieve. Suppose you want to achieve 1000 RPS, i.e. you want your system to handle 1000 requests per second. Given the previous formula:

Request Rate = (VU * R) / T

The VU calculation formula is:

VU = (Request Rate * T) / R →

VU = (1000 * 6) / 10 = 600
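Solving for VU in code makes it easy to try other targets. A minimal sketch with the hypothetical name `requiredVUs`; it rounds up with `Math.ceil` because VUs come in whole numbers, an assumption that only matters when the division does not come out even.

```javascript
// Hypothetical helper: VU = (Request Rate * T) / R, rounded up to a whole VU.
function requiredVUs(targetRps, iterationSeconds, requestsPerIteration) {
  if (requestsPerIteration <= 0) {
    throw new RangeError("requestsPerIteration (R) must be positive");
  }
  return Math.ceil((targetRps * iterationSeconds) / requestsPerIteration);
}

console.log(requiredVUs(1000, 6, 10)); // 600
console.log(requiredVUs(1000, 6, 7));  // 858 (857.14... rounded up)
```

Rounding up means a paced test lands slightly above the target rate rather than below it (858 VUs × 7 requests / 6s = 1001 RPS).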

Generating Constant Request Rate

The following script generates a constant request rate. Let's use it to test our previous calculation:

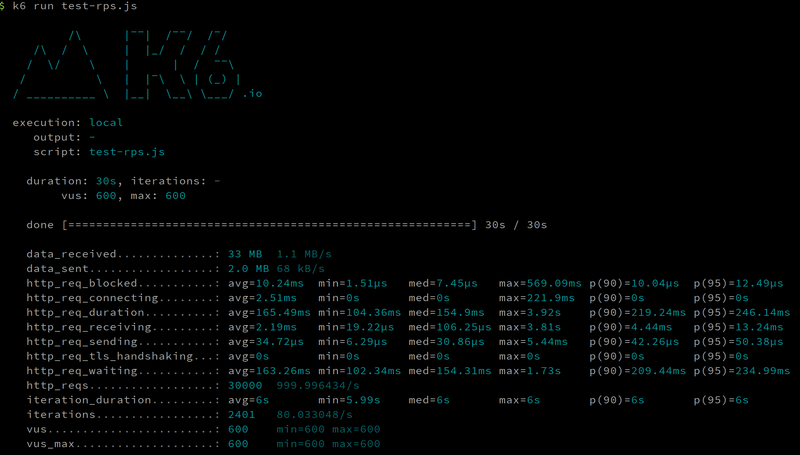

As the http_reqs field in the output below shows, 30000 requests were made over 30 seconds, an average request rate of roughly 1000 requests per second.

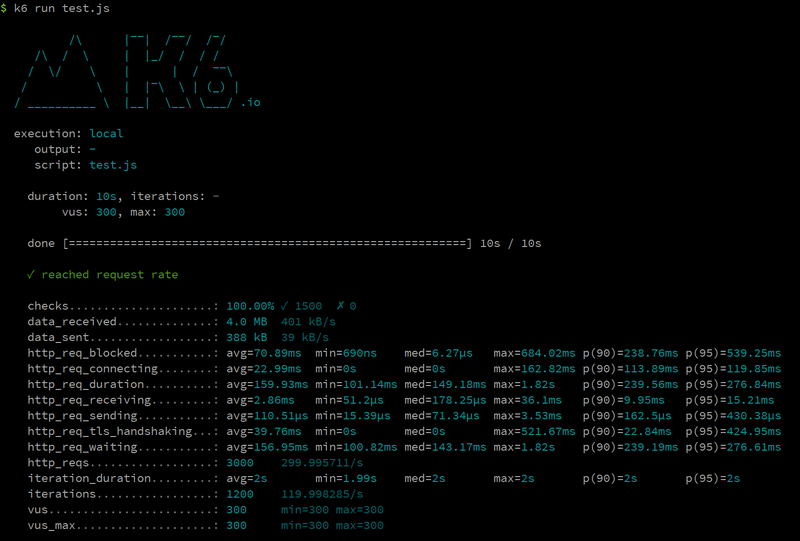
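The script itself appears only as a screenshot, but the pacing idea this article relies on (measure how long the iteration took, sleep for the remainder of T, and check that the remainder is positive) can be sketched in plain JavaScript. This is an illustration under assumptions, not the script from the screenshot: `pacingRemainder` and `runPacedIteration` are hypothetical names, and a simulated clock stands in for real time so the code runs outside k6. In an actual k6 script, the requests would be made with `http.get(...)` from `k6/http`, and the check and pause would use `check()` and `sleep()` from the `k6` module.

```javascript
// Hypothetical sketch of per-iteration pacing (not the article's original script).
// A fake clock replaces real time so the logic can run outside k6.

// Seconds left in a T-second iteration after the work took (endMs - startMs).
function pacingRemainder(iterationSeconds, startMs, endMs) {
  return iterationSeconds - (endMs - startMs) / 1000;
}

// Simulates one paced VU iteration.
// workMs: simulated time the R requests took in total.
function runPacedIteration(clock, iterationSeconds, workMs) {
  const startMs = clock.now;
  clock.now += workMs; // stands in for making the R requests
  const remainder = pacingRemainder(iterationSeconds, startMs, clock.now);
  const onPace = remainder > 0; // the condition the check asserts
  if (onPace) {
    clock.now += remainder * 1000; // stands in for sleep(remainder), in seconds
  }
  return { onPace, durationMs: clock.now - startMs };
}

const clock = { now: 0 };
console.log(runPacedIteration(clock, 6, 4500)); // { onPace: true, durationMs: 6000 }
console.log(runPacedIteration(clock, 6, 7000)); // { onPace: false, durationMs: 7000 }
```

When the requests take longer than T, the remainder is negative, there is nothing to sleep off, and that VU falls behind the target rate. This is why T should be larger than the time an iteration actually needs.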

In the second example, we want to achieve 300 RPS. We have two requests per iteration (R = 2) and set the iteration time to T = 2 seconds. Note that the check on line 19 makes sure that each VU keeps to the desired request rate: it verifies that every remainder (the time left in T once an iteration's requests have completed) is greater than zero. If any remainder is not, i.e. 300 RPS has not been reached, the check reports a failure when the test finishes. The same calculation we did above applies:

VU = (300 * 2) / 2 = 300

Since we reached 300 RPS, the check passes (shown with a green check mark in the test output), and in total 3000 requests were made over the course of 10 seconds.
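Both runs can be cross-checked against the formula: if every iteration stays on pace, each VU completes floor(duration / T) iterations of R requests. The sketch below uses the hypothetical name `expectedRequests`, assumes every iteration lasts exactly T, and counts only complete iterations (ramp-up and any interrupted final iteration are ignored).

```javascript
// Hypothetical helper: total requests a paced test should produce,
// assuming every iteration lasts exactly T and only complete iterations count.
function expectedRequests(vus, requestsPerIteration, iterationSeconds, testSeconds) {
  const iterationsPerVU = Math.floor(testSeconds / iterationSeconds);
  return vus * requestsPerIteration * iterationsPerVU;
}

console.log(expectedRequests(600, 10, 6, 30)); // 30000 (first example, ~1000 RPS)
console.log(expectedRequests(300, 2, 2, 10));  // 3000 (second example, 300 RPS)
```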

Conclusion

The usual way to define load in k6 is in terms of VUs. But as the formulas and examples show, it is possible to generate a reasonably constant request rate with k6 by limiting the number of requests each VU can make over a period of time. The mechanism is to sleep at the end of each VU iteration, pausing execution until the remainder of T has passed. The check indicates whether the test is reaching the desired request rate. The method described here works both locally and in the cloud, and provides an almost constant request rate across a set of requests.