With version 0.41, k6 has changed how it handles, stores, and aggregates the data it collects during a load test. Most users probably won't notice any immediate difference, but we expect these changes to help us make k6 more performant and easier to integrate into the larger observability ecosystem.

Up to and including version 0.40, k6 took a custom approach to collecting the data used to calculate test results. Version 0.41 redesigns the internals of k6 around a model closer to that of traditional time-series databases such as Prometheus. This model, which we call Time Series, tracks events over time by storing and indexing their values according to sets of labels, or tags. Time series make storing, indexing, and querying discrete series of timestamped values easy, efficient, and convenient. The model also makes k6 more efficient and, more importantly, eases the integration of k6 test results with Prometheus and the larger Grafana observability ecosystem.
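To make the idea concrete, here is a minimal Python sketch of a tag-indexed time-series store, assuming a series is identified by its metric name plus its sorted set of tags. It is a conceptual model only, not k6's actual implementation (which is written in Go); the class and method names are hypothetical.

```python
from collections import defaultdict


class TimeSeriesStore:
    """Hypothetical store keeping one series per (metric, tag set) pair."""

    def __init__(self):
        # Series key -> list of (timestamp, value) points.
        self.series = defaultdict(list)

    @staticmethod
    def key(metric, tags):
        # Sorting makes the key independent of tag insertion order.
        return (metric, tuple(sorted(tags.items())))

    def add(self, metric, tags, timestamp, value):
        self.series[self.key(metric, tags)].append((timestamp, value))

    def query(self, metric, **labels):
        # Return every series of `metric` whose tags contain all given labels.
        result = {}
        for (name, tag_items), points in self.series.items():
            tags = dict(tag_items)
            if name == metric and all(tags.get(k) == v for k, v in labels.items()):
                result[tag_items] = points
        return result


store = TimeSeriesStore()
store.add("http_req_duration", {"method": "GET", "status": "200"}, 1, 120.0)
store.add("http_req_duration", {"status": "200", "method": "GET"}, 2, 95.0)
store.add("http_req_duration", {"method": "GET", "status": "500"}, 3, 310.0)

print(len(store.series))  # 2: the first two samples share one tag set
print(store.query("http_req_duration", status="500"))
```

Because the tag set is the index, querying by a label only touches the matching series instead of scanning every individual sample's tags.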

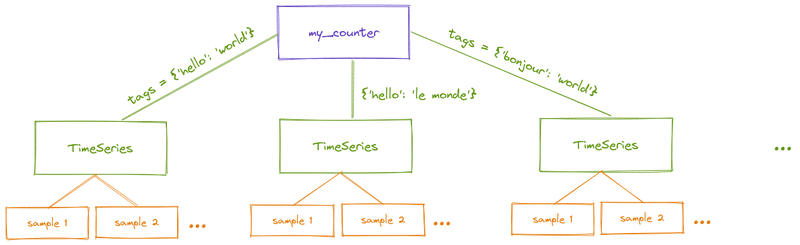

The k6 data model

From a user's perspective, metrics track the performance of the operations that VUs perform during a test. Metrics are either system metrics, which k6 predefines and always collects, or custom metrics, which the user defines and feeds. During a test run, the APIs available to the user collect, compute, and hold aggregated representations of how specific aspects or components of the system perform over time.

For instance, performing an HTTP call generates several HTTP-related metrics, such as http_reqs, http_req_duration, and http_req_failed. Users can also tag their operations, which makes the affected metrics classify their results according to that context. To produce meaningful results, metrics need to store and aggregate samples in a way that preserves the classification users apply through tagging.
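As a rough illustration of tag-preserving aggregation, the following Python sketch groups http_req_duration samples by their tag set and computes a small trend summary (count, average, maximum) for each group. The sample values and the `page` tag are invented for illustration, and the `summarize` helper is hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical samples: (metric name, tags, value in milliseconds).
samples = [
    ("http_req_duration", {"page": "home"}, 110.0),
    ("http_req_duration", {"page": "home"}, 130.0),
    ("http_req_duration", {"page": "checkout"}, 400.0),
    ("http_req_duration", {"page": "checkout"}, 200.0),
]


def summarize(samples, metric):
    """Aggregate one metric's values separately for each distinct tag set."""
    groups = defaultdict(list)
    for name, tags, value in samples:
        if name == metric:
            groups[tuple(sorted(tags.items()))].append(value)
    return {
        tag_set: {"count": len(values), "avg": mean(values), "max": max(values)}
        for tag_set, values in groups.items()
    }


summary = summarize(samples, "http_req_duration")
for tag_set, stats in summary.items():
    print(dict(tag_set), stats)
```

Aggregating per tag set keeps the slow checkout requests from being hidden inside a single overall average.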

Example

As a demonstration of the data model, consider the following script:

As expected, we would observe one sample per tag value in the output:

A new data model compatible with Prometheus

k6 used to track the performance of tagged operations with a suboptimal, computationally expensive technique for mapping collected samples to their metrics: each sample collected by k6 held its own copy of the tags. Furthermore, while improving support for Prometheus as a k6 output, we found that this model required costly conversions from our internal data model to one that Prometheus could ingest.
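The following simplified Python sketch contrasts the two approaches: in the old-style model, every sample carries its own copy of the tags, while in a time-series model, samples point to a shared series object that holds the tags once. The class names (`OldSample`, `Series`, `NewSample`, `SeriesRegistry`) are hypothetical and don't mirror k6's Go types.

```python
from dataclasses import dataclass


# Old-style model (simplified): every sample carries its own copy of the tags.
@dataclass
class OldSample:
    metric: str
    tags: dict
    value: float


# Time-series model (simplified): tags live once in a series; samples point to it.
@dataclass(frozen=True)
class Series:
    metric: str
    tags: tuple  # sorted (key, value) pairs, hashable


@dataclass
class NewSample:
    series: Series
    value: float


class SeriesRegistry:
    """Hypothetical registry returning one shared Series per (metric, tag set)."""

    def __init__(self):
        self._series = {}

    def get(self, metric, tags):
        key = (metric, tuple(sorted(tags.items())))
        if key not in self._series:
            self._series[key] = Series(metric, key[1])
        return self._series[key]


tags = {"environment": "staging", "method": "GET"}
old = [OldSample("http_reqs", dict(tags), 1.0) for _ in range(1000)]

registry = SeriesRegistry()
new = [NewSample(registry.get("http_reqs", tags), 1.0) for _ in range(1000)]

print(len({id(s.tags) for s in old}))    # 1000 separate tag dictionaries
print(len({id(s.series) for s in new}))  # 1 shared series object
```

The series object also maps naturally onto a Prometheus series (a metric name plus a label set), which is part of why the conversion becomes cheaper.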

k6 v0.41 therefore revamps our internal data-model implementation and switches to a Time Series model, similar to how modern time-series databases store and represent data. Each metric now stores and aggregates its data in a set of internal time series. This approach is more memory efficient and, down the road, should lead to a simpler and more efficient integration with Prometheus. If you're interested in the details, you can read more in our GitHub issue dedicated to the topic.

Finer-grained contextual metadata

Beyond easing further integration with Prometheus and improving performance, this new data model allows for more efficient storage and isolation of measurements, especially when tags are involved. Tags already affected the performance and resource usage of k6 before, but this change to our data model made their impact even more visible. When users tag their operations, k6 maps each sample to a time series of the corresponding metric, one per distinct combination of tag values.

For example, consider two tagged HTTP GET calls, one to a staging service and one to a production service. One uses the {'environment': 'staging'} tag, and the other the {'environment': 'production'} tag. This creates two dedicated time series, one per environment, for each metric that observes the effects of HTTP calls.
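Counting the resulting series in Python makes the example explicit. This sketch assumes, for simplicity, that each request feeds only three of k6's HTTP metrics (k6 actually emits more per request) and that a series is identified by the metric name plus its sorted tags.

```python
# A subset of k6's HTTP metrics; in practice k6 emits more per request.
HTTP_METRICS = ["http_reqs", "http_req_duration", "http_req_failed"]

requests = [
    {"environment": "staging"},
    {"environment": "production"},
    {"environment": "staging"},  # repeated call: reuses the existing staging series
]

series = set()
for tags in requests:
    for metric in HTTP_METRICS:
        series.add((metric, tuple(sorted(tags.items()))))

for metric in HTTP_METRICS:
    per_metric = sorted(s[1] for s in series if s[0] == metric)
    print(metric, per_metric)

print(len(series))  # 3 metrics x 2 environments = 6 series
```

Repeating a call with an existing tag set adds samples to an existing series rather than creating a new one.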

Thus, as with Prometheus, when users define many different tags whose values have high cardinality (many different possible values), the number of time series that k6 creates and manages grows quickly.
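The growth is multiplicative: in the worst case, the number of series per metric is the product of the number of distinct values of each tag. A quick Python calculation with made-up cardinalities shows how a single high-cardinality tag, here a hypothetical user_id, dominates the total.

```python
from math import prod

# Hypothetical number of distinct values observed per tag key.
tag_cardinality = {"environment": 2, "endpoint": 50, "user_id": 1_000}

# Worst case: every combination of tag values appears, each with its own series.
worst_case = prod(tag_cardinality.values())
print(worst_case)  # 100000 series per metric

# Dropping the high-cardinality user_id tag shrinks the bound dramatically.
without_user = prod(v for k, v in tag_cardinality.items() if k != "user_id")
print(without_user)  # 100
```

Real tests usually hit fewer combinations than this upper bound, but the bound shows which tags are worth reconsidering.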

To mitigate this, we have added the underlying capability for k6 to attach contextual metadata to samples without creating time series to track it. This capability is not yet directly accessible from user scripts, only from k6 core modules and xk6 extensions. Because k6 itself defines tags on specific operations, we also converted some of them into non-indexed metadata to further improve memory usage.
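The difference between indexed tags and non-indexed metadata can be modeled in a few lines of Python. This sketch assumes a sample is a flat set of key-value fields plus a value, and that only a chosen set of keys forms the series key; the `ingest` helper is hypothetical and is not k6's code. Using per-VU and per-iteration identifiers as example fields, it shows why keeping such fields as metadata keeps the series count flat, and why a selector that only looks at tags can no longer match them.

```python
from collections import defaultdict


def ingest(samples, indexed_keys):
    """Split each sample's fields into indexed tags and non-indexed metadata.

    Only `indexed_keys` become part of the series key; every other field is
    kept on the sample as metadata (a simplified model, not k6's code).
    """
    series = defaultdict(list)
    for fields, value in samples:
        tags = tuple(sorted((k, v) for k, v in fields.items() if k in indexed_keys))
        metadata = {k: v for k, v in fields.items() if k not in indexed_keys}
        series[tags].append((value, metadata))
    return series


# Hypothetical samples from 10 VUs running 5 iterations each.
samples = [
    ({"scenario": "default", "vu": str(vu), "iter": str(it)}, 1.0)
    for vu in range(1, 11)
    for it in range(5)
]

as_tags = ingest(samples, indexed_keys={"scenario", "vu", "iter"})
as_metadata = ingest(samples, indexed_keys={"scenario"})

print(len(as_tags))      # 50 series: one per (vu, iter) combination
print(len(as_metadata))  # 1 series; vu and iter ride along as metadata

# A tag-based selector (like a threshold's) only sees indexed tags.
has_vu_tag = any(dict(key).get("vu") == "1" for key in as_metadata)
print(has_vu_tag)  # False
```

The metadata values are still stored with every sample, so an output can emit them, but they no longer multiply the number of series.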

Namely, as of k6 v0.41, the vu and iter system tags (which are disabled by default) have been replaced by non-indexed metadata: when enabled through systemTags, they're still stored and output, but not indexed as time series. This means they can no longer be used in thresholds, and various outputs may emit them differently or ignore them completely.

In practice, that means a script using systemTags, such as:

Before, this script would have output the vu and iter tags as part of each data point's tags:

Now, k6 outputs them as metadata:

Finally, another minor change related to time series affects URL grouping: when you use this technique, the url tag on the resulting http_req_* metric samples now equals the value of the name tag.
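A toy Python model illustrates the effect, assuming, as in k6, that the name tag defaults to the requested URL and that URL grouping sets name explicitly. The `request_tags` helper, the example.com URLs, and the PostsItemURL name are hypothetical. With grouping, a hundred distinct URLs collapse into one series because url and name carry the same grouped value.

```python
def request_tags(url, name=None):
    """Hypothetical model of the url and name tags on http_req_* samples.

    Assumes name defaults to the URL, and that when a name is set
    (URL grouping), the url tag takes the same value as name.
    """
    if name is None:
        return {"name": url, "url": url}
    return {"name": name, "url": name}


urls = [f"https://example.com/posts/{i}" for i in range(100)]

ungrouped = {tuple(sorted(request_tags(u).items())) for u in urls}
grouped = {tuple(sorted(request_tags(u, name="PostsItemURL").items())) for u in urls}

print(len(ungrouped))  # 100 distinct tag sets
print(len(grouped))    # 1 tag set shared by every request
```

If url kept the raw address while name was grouped, each request would still produce its own tag set, which defeats the purpose of grouping under a time-series model.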

What’s next?

We're currently exploring whether there are more use cases for contextual metadata and what the design and behavior of such a feature could look and feel like. We'd be keen to hear from you on that front, so please let us know if you have use cases for such a feature.