Many modern applications are built on a microservices architecture that leverages cloud-native technologies.

This architecture improves the scalability and fault tolerance of individual components, but it also makes applications more complex, mostly because of the interdependencies between services.

This complexity makes it difficult for engineers to fully understand how their applications will react to abnormal conditions, such as a dependency failure or performance degradation. Under these conditions, applications frequently fail in unpredictable ways.

According to a study of failures in real-world distributed systems [1], the vast majority of catastrophic system failures (92%) resulted from the incorrect handling of non-fatal errors, and, in more than half of the cases (58%), the resulting faults could have been detected through simple testing of error-handling code.

However, implementing tests that reproduce these conditions would be difficult and time-consuming. What's more, the resulting tests would likely be fragile and produce unreliable results.

Fortunately, it's unnecessary to write tests that reproduce specific abnormalities because "from the distributed system perspective, almost all interesting availability experiments can be driven by affecting latency or response type" [2].

In other words, we don’t need to kill instances or saturate resources in the hope of generating the faults we want to test. We can instead inject changes in the latency and response type of requests between services. This simulates situations from real incidents and lets us validate whether the applications handle them properly.
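To make the idea concrete, here is a minimal sketch in plain JavaScript, not the xk6-disruptor implementation. It wraps a stand-in service handler so that a fraction of responses is replaced with an error and every healthy response gets extra latency. The names `withFaults`, `catalogue`, and the fault fields `errorRate`, `errorCode`, and `delayMs` are assumptions made for illustration, and latency is modeled as a number rather than a real delay so the example stays deterministic.

```javascript
// Hypothetical helper: wraps a service handler and injects faults into its responses.
// The fault fields (errorRate, errorCode, delayMs) are illustrative names.
// `random` is injectable so the demo below is deterministic.
function withFaults(handler, fault, random = Math.random) {
  return function faultyHandler(request) {
    // Response-type fault: replace a fraction of responses with an error.
    if (random() < fault.errorRate) {
      return { status: fault.errorCode, latencyMs: fault.delayMs };
    }
    // Latency fault: add a fixed delay on top of the real response time.
    const response = handler(request);
    return { ...response, latencyMs: response.latencyMs + fault.delayMs };
  };
}

// Stand-in for a healthy dependency that answers in 5 ms.
function catalogue(request) {
  return { status: 200, body: `product ${request.id}`, latencyMs: 5 };
}

// Deterministic stand-in for Math.random: every tenth call returns 0, which triggers a fault.
let calls = 0;
const everyTenth = () => (calls++ % 10 === 0 ? 0 : 1);

const faultyCatalogue = withFaults(
  catalogue,
  { errorRate: 0.1, errorCode: 500, delayMs: 100 },
  everyTenth,
);

const responses = Array.from({ length: 100 }, (_, id) => faultyCatalogue({ id }));
const failed = responses.filter((r) => r.status === 500).length;
const slowest = Math.max(...responses.map((r) => r.latencyMs));
console.log(`${failed} of ${responses.length} requests failed, slowest took ${slowest} ms`);
```

The caller of `faultyCatalogue` cannot tell an injected error from a real one, which is the point: the error-handling code is exercised without breaking any infrastructure.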

In summary, fault injection testing proposes validating, early in the development process, whether applications can handle the conditions created by incidents in an acceptable way.

Fault injection testing with k6

Let’s examine how fault injection testing can be implemented using k6.

We will use a fictional e-commerce site that allows users to browse a catalogue of products and buy items.

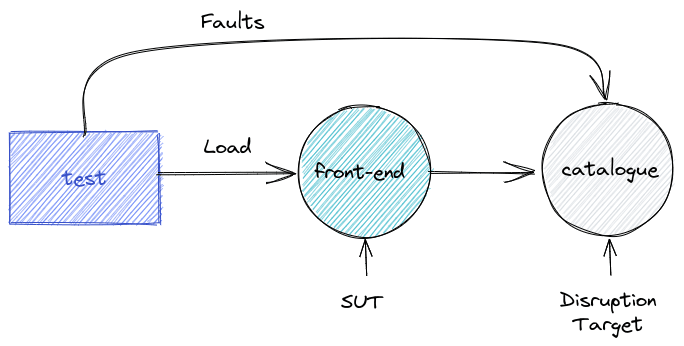

To analyze how the Front-end service will handle faults in the Catalogue service, the developers implement a fault injection test that applies load to the Front-end service while injecting faults in the requests served by the Catalogue service, as described in the following figure:

We are just going to skim over the code to show the more relevant aspects. You will find the code and the instructions for setup in the xk6-disruptor-demo repository.

We define the requestProduct function (1). This function makes an API request to the Front-end service for the details of a product. The function checks for any error in the response (2).

We also define a threshold of 97% for the rate of requests that pass the check. If the ratio of successful requests is below this threshold, the test fails. This is our SLO.
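The pass/fail logic behind such a threshold can be sketched in a few lines of plain JavaScript. This is an illustration of the arithmetic only: in a k6 script the same rule is typically declared in `options.thresholds`, for example as `checks: ['rate>0.97']`. The helper names `passRate` and `meetsThreshold` are hypothetical, and treating the boundary value itself as a failure is an assumption.

```javascript
// Fraction of check results that passed (each result is true or false).
function passRate(results) {
  if (results.length === 0) return 0;
  return results.filter(Boolean).length / results.length;
}

// The test passes only when the pass rate is strictly above the threshold (assumed).
function meetsThreshold(results, threshold) {
  return passRate(results) > threshold;
}

const threshold = 0.97;
const healthy = Array.from({ length: 100 }, (_, i) => i >= 2); // 98 checks passed
const degraded = Array.from({ length: 100 }, (_, i) => i >= 11); // 89 checks passed

console.log(`healthy run meets the SLO: ${meetsThreshold(healthy, threshold)}`);
console.log(`degraded run meets the SLO: ${meetsThreshold(degraded, threshold)}`);
```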

We then define the injectFault function that creates a ServiceDisruptor for the Catalogue service. The disruptor injects faults (3) that make the Catalogue service return an HTTP 500 error for 10% of the requests it serves (4).

Finally, we define two scenarios. The load scenario applies the load to the Front-end service, and the fault scenario injects the faults into the Catalogue service.
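As a rough sketch, the two scenarios could be described with an object like the one below. It is written as a plain object so that it runs outside k6; in a k6 script it would be exported as `options`, and the functions named in `exec` would be exported too. The executors are standard k6 executors, but the rate, durations, and virtual user counts are assumed values, not the ones used in the demo repository. `scenarioFunctions` is a hypothetical helper that lists the functions each scenario runs.

```javascript
// Assumed values throughout: rate, duration, and VU counts are illustrative only.
const options = {
  scenarios: {
    // Applies a constant request rate to the Front-end service.
    load: {
      executor: 'constant-arrival-rate',
      rate: 100, // iterations per second (the default timeUnit is 1s)
      preAllocatedVUs: 10,
      maxVUs: 100,
      exec: 'requestProduct',
      startTime: '0s',
      duration: '30s',
    },
    // Runs the fault injection once, in parallel with the load.
    fault: {
      executor: 'shared-iterations',
      iterations: 1,
      vus: 1,
      exec: 'injectFault',
      startTime: '0s',
    },
  },
};

// Hypothetical helper: the function each scenario executes, in declaration order.
function scenarioFunctions(opts) {
  return Object.values(opts.scenarios).map((scenario) => scenario.exec);
}

console.log(scenarioFunctions(options).join(', '));
```

Starting both scenarios at the same time means the faults are active while the load is applied, so the checks measure how the Front-end service behaves during the disruption.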

If we execute the test we get the following results:

We can see that only 89% of requests pass the check, missing the defined threshold of 97%, and therefore the test fails.

From these results, the Front-end team can determine that they aren't handling the errors from the Catalogue service. Most likely, they are not retrying failed requests, as the failure rate is close to the 10% of errors injected.

This shouldn't be surprising, given the conclusions of the study cited before: a vast majority of failures in distributed systems were caused by inappropriate handling of non-fatal errors, including missing error handling code.

The developers can then use this test to validate different solutions, for instance a retry mechanism for the requests, until they consistently obtain an acceptable rate of successful requests.
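As an illustration of such a solution, the following plain JavaScript sketch wraps a request function with a simple retry loop and estimates the success rate we would expect from it. It is not the Front-end service's code. `withRetries`, `expectedPassRate`, and `flakyCatalogue` are hypothetical names, and the estimate assumes that each attempt fails independently with the injected error rate.

```javascript
// Calls `request` up to `maxRetries + 1` times and returns the first non-5xx response.
function withRetries(request, maxRetries) {
  return function retryingRequest(arg) {
    let response = request(arg);
    for (let attempt = 0; attempt < maxRetries && response.status >= 500; attempt++) {
      response = request(arg);
    }
    return response;
  };
}

// Expected fraction of successful requests when each attempt fails
// independently with probability `errorRate`.
function expectedPassRate(errorRate, maxRetries) {
  return 1 - errorRate ** (maxRetries + 1);
}

// Stand-in dependency with a fixed failure schedule: attempts 0, 10, 20, ... return 500.
let attempts = 0;
function flakyCatalogue() {
  return { status: attempts++ % 10 === 0 ? 500 : 200 };
}

const resilient = withRetries(flakyCatalogue, 1);
const statuses = Array.from({ length: 100 }, () => resilient().status);
const passed = statuses.filter((status) => status === 200).length;

console.log(`passed ${passed} of ${statuses.length} requests`);
console.log(`expected rate without retries: ${expectedPassRate(0.1, 0).toFixed(2)}`);
console.log(`expected rate with one retry: ${expectedPassRate(0.1, 1).toFixed(2)}`);
```

Under the independence assumption, a single retry lifts the expected success rate from about 90% to about 99%, above the 97% threshold. The fixed schedule in the demo never fails twice in a row, so it is more optimistic than a real run would be.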

The developers can also modify the test to reflect other situations, like higher error rates, different errors, or higher latencies in the responses, in order to fine-tune the solution and avoid issues such as retry storms.
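The retry storm risk can be estimated with a short calculation. The sketch below, in plain JavaScript, computes how many attempts each original request generates on average when failed attempts are retried. `expectedAttempts` is a hypothetical helper, and the model assumes independent failures and no delay between retries.

```javascript
// Expected number of attempts per original request when each attempt fails
// independently with probability `errorRate` and up to `maxRetries` retries are made.
// Attempt k + 1 only happens if the first k attempts all failed, so the
// expectation is the sum of errorRate^k for k = 0..maxRetries.
function expectedAttempts(errorRate, maxRetries) {
  let total = 0;
  for (let k = 0; k <= maxRetries; k++) {
    total += errorRate ** k;
  }
  return total;
}

for (const errorRate of [0.1, 0.5, 0.9]) {
  const factor = expectedAttempts(errorRate, 3);
  console.log(`error rate ${errorRate}: ${factor.toFixed(2)}x load on the dependency`);
}
```

With three retries, a 10% error rate adds roughly 11% extra load, while a 90% error rate more than triples it. This is why it is worth rerunning the test with higher error rates before settling on a retry policy.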

Conclusions

The reliability of complex distributed applications can be improved significantly just by testing their error-handling code.

But to be effective, fault injection testing must be integrated into existing testing practices. It should:

- Be able to reuse existing load and functional tests

- Define the test conditions in terms that are familiar to developers: latency and error rate

- Ensure the test has a predictable effect on the target of the fault injection

- Coordinate fault injection from the test code

At Grafana k6, we are committed to making fault injection testing practices accessible to a broad spectrum of organizations by building a solid foundation from which they can progress towards more reliable applications.

If you're interested in learning how to use xk6-disruptor to test your application's resilience early in the development process, visit the project's documentation.

For the code and the instructions to set up the sample application, go to the xk6-disruptor-demo repository.

References

[1] Simple Testing Can Prevent Most Critical Failures: An Analysis of Production Failures in Distributed Data-Intensive Systems. Yuan et al. USENIX OSDI 2014.

[2] Chaos Engineering. Casey Rosenthal and Nora Jones. O’Reilly Media.