I was invited to elaborate on my position on recording in performance testing. The discussion originally started as a comment on Ragnar Lönn's post Open Source Load Testing Tool Review, published here earlier.

Then the discussion moved to my post The Role of Recording in Load Testing Tools.

Frankly, I was rather surprised by the discussion. From my experience, the need for general-purpose load testing tools to support recording is quite obvious. Many technologies generate such sophisticated HTTP requests that recording is practically the only option. The lack of advanced recording functionality automatically limits the areas where the tool may be used.

And recording here doesn't mean just plain recording – it also includes all the functionality needed to efficiently create a working script from it. It takes a lot of effort to implement properly. It is not surprising that it is usually a weak spot of most open source products (and many don't support it at all).

It doesn't mean, of course, that there is no space for tools that don't support recording or support it in a simplified way. There are definitely numerous cases where programming or simple recording works – as we see from the number of such products on the market and the number of people using them. Of course, there is no need for more sophisticated tools when a simple one works for your system. However, if you need to test different systems (typical, for example, for corporate environments), you need all the help you can get from the tool.

There are multiple aspects of recording. Support for different technologies is, of course, the first. Unfortunately, even mature products often enough have issues recording sophisticated or new technologies. There are cases when it just doesn't work. And there are cases where other approaches to creating scripts may work better. Recording is definitely not the only approach and not necessarily always the best (although it has been the mainstream approach to load testing until now – and, while we see more alternatives now, I suspect it still is).

Actually, I have always suggested using other approaches to scripting when recording is not optimal – I presented Custom Load Generation in 2001 (basically recommending the use of API calls inside load testing tools) and then elaborated on the topic across multiple presentations. However, all these alternative approaches have their own limitations – so now I want to highlight the power and importance of recording, as it looks like it is sometimes overlooked nowadays.

It is often advised to use the API for scripting. It is good advice – if you have a stable API and you know exactly what calls are invoked for each user action (or when you are just testing APIs – which is a noble task by itself when complemented by testing of the whole product). Unfortunately, quite often this is not the case (we are not diving here into the discussion of what product architecture and design should be – just stating the fact that it is usually not so straightforward in real life).

Even if you have a good REST API, recording allows you to see the actual sequence of requests and the actual parameters used instead of trying to figure them out yourself from scratch. As the client-side parts become more sophisticated (we rarely see simple HTML anymore), the sequence is often not obvious at all. Actually, when I test products using APIs, I usually uncover a lot of issues with the help of recording – such as duplicate or unnecessary calls, or calls that are different from what the developers believed were used.

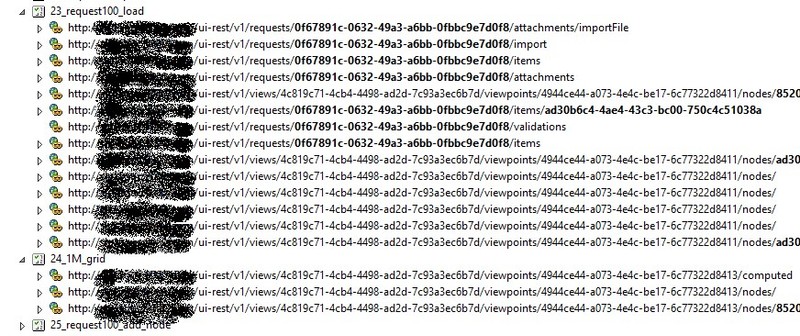

Here is a script for a product I worked with recently that uses a REST API – but the "request load" user action in the picture invokes 14 different requests, and most are not easy to type manually (several are actually much longer and are just cut off in the picture).

Of course, it is not played back as is, but only after it is properly correlated and parameterized (the values in bold there are the parameterized ones).

The ability to group recorded requests is another important feature. For complex cases, it is important to be able to create such groups and specify their names and any necessary comments during recording. Unfortunately, some products don't provide such functionality – even if they group requests, they group them by some algorithm (say, by the delay between requests). While that may work in many cases, it becomes a major issue when we have dozens or hundreds of similar HTTP requests (which may be typical for some frameworks). It is especially so if the server is slow to process some requests (say, generating a large report) – the script then gets broken into groups that do not match the business / user view (for example, requests preparing the report get grouped with the earlier action, while requests presenting the result get into the next action).
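To illustrate how delay-based grouping can split a user action, here is a minimal Python sketch. It is not taken from any particular tool: the `RecordedRequest` type, the request names, the timings, and the 2-second threshold are all hypothetical, and the gap is measured between request start times for simplicity.

```python
from dataclasses import dataclass


@dataclass
class RecordedRequest:
    name: str     # hypothetical request name
    start: float  # seconds since the start of the recording
    action: str   # label the tester would have assigned during recording


def group_by_delay(requests, max_gap=2.0):
    """Start a new group whenever the gap between request starts exceeds max_gap."""
    groups = []
    previous_start = None
    for req in requests:
        if previous_start is None or req.start - previous_start > max_gap:
            groups.append([])
        groups[-1].append(req)
        previous_start = req.start
    return groups


def group_by_label(requests):
    """Group by the label given during recording, keeping first-seen order."""
    groups = {}
    for req in requests:
        groups.setdefault(req.action, []).append(req)
    return list(groups.values())


# Hypothetical recording: the report takes 8 seconds to generate on the server,
# and the user clicks quickly before and after it.
recording = [
    RecordedRequest("form.html", 0.0, "open report form"),
    RecordedRequest("form.js", 0.3, "open report form"),
    RecordedRequest("run_report", 1.5, "run report"),
    RecordedRequest("result.html", 9.5, "run report"),
    RecordedRequest("result_chart", 9.8, "run report"),
    RecordedRequest("logout", 10.5, "log out"),
]

for label, grouper in (("by delay", group_by_delay), ("by label", group_by_label)):
    print(label, [[r.name for r in g] for g in grouper(recording)])
```

With these made-up timings, the delay-based grouping attaches the request that starts the report to the previous action and the requests that present the result to the next one, while the labels assigned during recording keep the three user actions intact.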

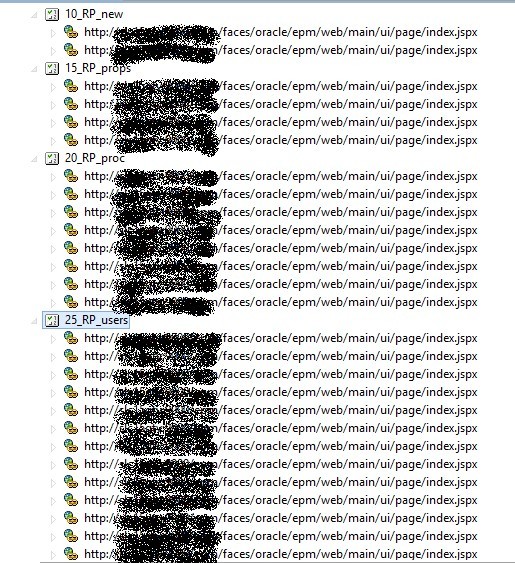

Here is another example – from another product I tested recently:

All individual HTTP requests look exactly the same and differ only in the HTTP body, which is not very helpful either, even if you decode it. For example:

or

With some experience you may see some useful grains of information there – in the first one, "search=user1" and "userPicker" may give you some ideas; in the second, "RP_0001", the name of the report, may be noticed. But looking through hundreds of such requests trying to verify and correct request grouping is definitely not the best way to handle it. And the more complex the script or technology is, the more difficult it will be to fix it manually later (although, of course, it doesn't appear to be an issue for simple scripts and technologies).

Another aspect, directly related to recording, is script correlation (parameterization of dynamic server responses). If you need to correlate more than a few variables, some automation of the process helps a lot. Yes, some versions of auto-correlation earned a bad reputation – sometimes doing more harm than good (although often because they were used blindly, without understanding). But there are different approaches here. Without going into deep research, we may perhaps distinguish:

- Rule-based correlation. You have libraries with specific rules where you explicitly specify what to correlate. It is rather straightforward and you completely control what happens (if a rule doesn't work, you either modify or disable it).

- Some built-in logic guessing what parameters to correlate – either just analyzing the script or analyzing playback and comparing it with the recording. As it uses a kind of AI, sometimes you need to spend more time fixing it (so I am not a big fan of that approach, as I prefer to know exactly what is going on) – but it may work fine in simple cases. If it is combined with some interaction logic (for example, showing you what is changing and how it suggests correlating it – and you tell it whether you want that correlation), it may be very helpful.
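The second approach, comparing playback with the recording, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how any specific product does it: each session is a list of hypothetical (request body, response body) pairs, the two sessions have the same steps, and a "value" is any run of at least 8 letters, digits, or `_ : -` characters.

```python
import re

# Assumption: dynamic values look like tokens of 8+ such characters.
TOKEN = re.compile(r"[A-Za-z0-9_:\-]{8,}")


def suggest_correlations(recorded, replayed):
    """Return (value, source_step, used_in_steps) candidates for correlation.

    A value is a candidate when it appears in a recorded response, is absent
    from the response at the same step of the playback, and is sent back in
    at least one later recorded request.
    """
    suggestions = []
    for step, ((_, rec_resp), (_, rep_resp)) in enumerate(zip(recorded, replayed)):
        dynamic = set(TOKEN.findall(rec_resp)) - set(TOKEN.findall(rep_resp))
        for value in sorted(dynamic):
            used_in = [
                later
                for later in range(step + 1, len(recorded))
                if value in recorded[later][0]
            ]
            if used_in:
                suggestions.append((value, step, used_in))
    return suggestions


# Hypothetical two-step sessions: the server issues a new token on each login.
recorded = [
    ("action=login&user=user1", "<html>token=abc12345XYZ; welcome user1</html>"),
    ("action=search&token=abc12345XYZ", "<html>results</html>"),
]
replayed = [
    ("action=login&user=user1", "<html>token=qrs67890LMN; welcome user1</html>"),
    ("action=search&token=abc12345XYZ", "<html>session expired</html>"),
]

for value, source, used_in in suggest_correlations(recorded, replayed):
    print(f"Correlate {value!r} from response {source} into requests {used_in}?")
```

The function only produces suggestions; leaving the final decision to the person reviewing them is what keeps this kind of guessing under control.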

Needing a large number of correlations is rather typical for business applications. For example, looking at the HTTP request body above, you may see the context id f8d14971ef44cfda:-6f632f9c:16071eed866:-8000-00000000000059b9, which is returned by every request and used by the next request, so we basically have as many such values as HTTP requests. So in every single request (and there are a few hundred of them) we need to extract this context id and use it in the next request. The idea of doing it manually, request by request, doesn't look appealing to me at all. All of them may be correlated by a single rule extracting it from server responses: setContextId\('(.+?)'\).
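As a rough illustration of what such a single rule does during playback, here is a minimal Python sketch. Only the regular expression comes from the example above; the `{contextId}` placeholder, the `send` callable, the request bodies, and the fake server are hypothetical stand-ins for whatever a real tool provides.

```python
import itertools
import re

# The rule from the text: capture the id passed to setContextId('...').
CONTEXT_ID_RULE = re.compile(r"setContextId\('(.+?)'\)")


def extract_context_id(response_body):
    """Return the context id found in a response, or None if there is none."""
    match = CONTEXT_ID_RULE.search(response_body)
    return match.group(1) if match else None


def play_back(request_templates, send):
    """Send each request, substituting the id extracted from the previous response."""
    context_id = None
    sent = []
    for template in request_templates:
        body = template.replace("{contextId}", context_id or "")
        response = send(body)
        sent.append(body)
        new_id = extract_context_id(response)
        if new_id is not None:
            context_id = new_id
    return sent


def make_fake_server():
    """Hypothetical server that returns a fresh context id with every response."""
    counter = itertools.count(1)

    def send(body):
        return f"<script>setContextId('ctx-{next(counter)}')</script>"

    return send


templates = [
    "op=login",
    "op=search&ctx={contextId}",
    "op=report&ctx={contextId}",
]
sent_bodies = play_back(templates, make_fake_server())
print(sent_bodies)
```

The same rule is applied to every response, so the script needs no per-request extraction logic regardless of whether it has three requests or a few hundred.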

So I strongly believe that general-purpose load testing tools must have powerful recording and correlation. Well, at least if they want to get into the corporate market. Yes, we have some niches for load testing tools without recording (and, considering the size of the whole market, these niches appear to be quite large). There are also ways to create load without using load testing tools at all – for example, by creating your own framework or redirecting real traffic. But not having recording limits the tool’s usage to simpler cases and makes it practically useless for corporate IT (again, as a general-purpose tool – quite often several different tools are used for different reasons, which allows the use of more specialized tools).

It is not an ideal analogy, but let's compare load testing tools with screwdrivers. If you need to tighten one screw and you have a screwdriver with a tip that somewhat fits, you do the job and are pretty happy with your screwdriver. If you need to tighten different types of screws periodically, you need either different screwdrivers or a screwdriver with interchangeable tips. If you need to tighten hundreds of screws per day, you probably need a power screwdriver. I am rather amused by reasoning like "you don't need a power screwdriver with interchangeable tips, as you can still do it with a simple screwdriver – and use pliers or a hammer if the tip doesn't fit". I am even more amused by reasoning like "if you get a power screwdriver, you will start to put screws in all the wrong places because it is so easy" or "if you use a power screwdriver, you will forget how to do it manually and get muscle atrophy". Not that such things never happen – if kids get a power screwdriver, you may find quite a few screws in the wrong places – but I doubt that these are serious concerns in professional discussions among builders.